
Keep AI in Check: A Managing Editor’s Checklist for Fact-Checking AI Outputs

In the blink of an eye, artificial intelligence tools have become an important part of content marketing strategies. New generative AI platforms designed for marketers seem to pop up every week, all pledging to enhance efficiency and boost productivity. While these tools can deliver on many of those promises, it’s important to remember that their output needs to be subjected to the same rigorous quality control measures content managers have always used.

After all, bland, uninformative AI content won’t help your marketing strategy. But publishing material riddled with errors is one of the fastest ways to damage your business’s reputation. That’s why it’s vital for managing editors to implement controls and processes for fact-checking AI when using generative AI tools.

The Importance of Fact-Checking AI

By now, you’ve probably read many pearl-clutching articles about how an easily verifiable mistake during Google Bard’s first public demo caused the stock price of Google’s parent company, Alphabet, to tumble over 7%, erasing (gasp) over $100 billion in market value.

In all fairness to Bard, this mistake was pretty mild, as was the high-profile mistake made by CNET’s in-house content AI, which involved (among other things) a faulty compound interest calculation. Both errors were embarrassing, but the embarrassment was heightened because AI was involved.

Minor mistakes like these happen all the time in the world of content writing. That’s why you should always have a review process to verify that the basic facts and claims in your content are correct, no matter how you’re producing that material. Generative AI may be able to speed up your content creation process, but there’s simply no substitute for editing and review. Even a minor mistake can undermine your reputation and cause your customers, clients, and partners to lose faith in your brand.

Fact-Checking AI Hallucinations

Spend any amount of time using generative AI, and you’re bound to run into an AI hallucination, which is a fancy way of saying that AI sometimes makes up things that aren’t real.

Mistakes like the ones made by Google’s and CNET’s content AIs involved relatively obvious, easily verified errors. What makes AI hallucinations so challenging is that they frequently sound credible despite being completely fabricated. Without a strict process in place for fact-checking AI, they are easy to miss, especially for someone who isn’t a subject matter expert.

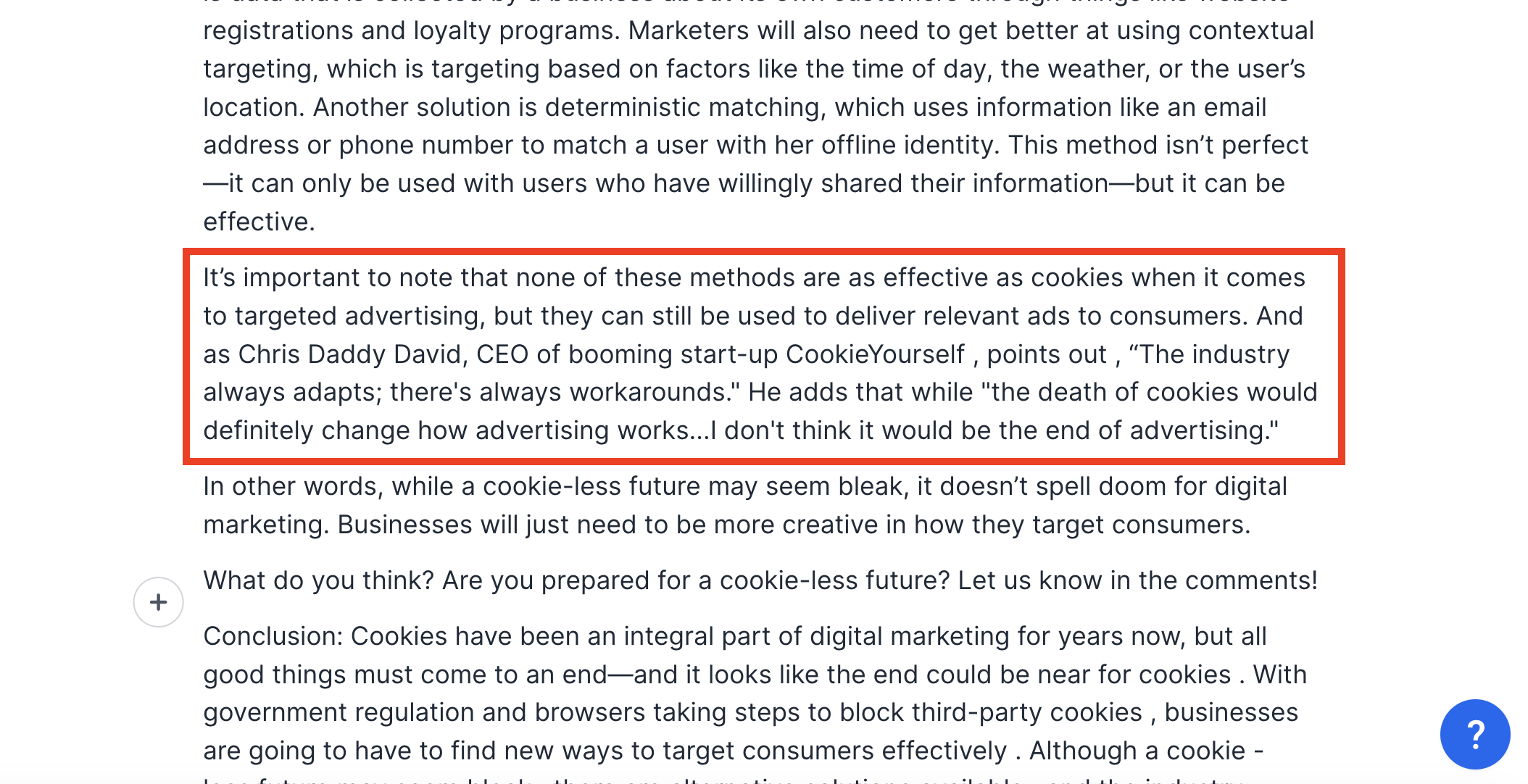

My very first encounter with generative AI came while reviewing an article a colleague had produced from a prompt about Google’s plan to phase out third-party cookies in Chrome. Overall, I was impressed with the output until I started coming across quotes. The first was an inferred quote from a real marketing industry expert, but it associated him with an organization that, as fact-checking revealed, he had never worked with.

That’s a problem, I thought, but not a huge one. It’s a mistake a junior copywriter could easily make, and honestly, one that would probably slip by many reviewers. But then I came across this full-blown AI hallucination just a paragraph later:

“And as Chris Daddy David, CEO of booming start-up CookieYourself, points out, ‘The industry always adapts; there’s always workarounds.’ He adds that while ‘the death of cookies would definitely change how advertising works…I don’t think it would be the end of advertising.’”

First and foremost, you’ll notice one of the hallmarks of AI hallucination here. While the quote makes grammatical sense and is sort of related to the topic, it doesn’t actually say anything specific or useful.

Secondly, despite being bland and bereft of value, this is a highly specific quote. Surely Chris Daddy David said something like this at some point? And surely there must be a lot of information about CookieYourself since it’s a “booming” start-up, right?

Nope. After spending about 30 minutes trying to track down both online, I’m convinced that neither one exists. (Although there is a well-respected drummer named Chris “Daddy” Dave, it doesn’t look like he ever ran a company called CookieYourself.)

What’s going on here? Well, the AI has absorbed enough training material to know that in this type of content, there should be a direct quote in the article. But the AI doesn’t actually understand why the quote should be there, what it should say, or what makes it relevant. Like all content AI, it’s mimicking a format. Since most generative AIs don’t actually lift content directly from existing material, this one created something that “looked” correct from a format standpoint and then moved on.

A Managing Editor’s Checklist for Fact-Checking AI Outputs

While we never ended up using this AI-generated article, it taught me a lot about how the technology works and how managing editors can fact-check AI outputs more effectively. Here are a few best practices to put in place as you begin to use this new technology:

1. Create a content brief

At Contently, we assign content to freelancers with a comprehensive brief like the one above. If the brief isn’t detailed, the freelancer likely won’t be able to produce a quality draft without more guidance. The same is true for our robot friends.

The quality of content AI outputs is highly dependent upon the information they have to work with. Gathering good data points and research related to the topic you want to write about can greatly limit the AI’s tendency to hallucinate or create misleading material. Put together an AI content brief featuring a list of quotes, data, and facts to incorporate into your writing prompts, and you may get better results with AI.

2. Craft targeted prompts

Speaking of prompts, generative AI tends to provide better outputs when tasked with writing narrow, highly specific content. Just as content managers frequently provide copywriters with an outline, creating a series of structured prompts can help the AI stay on topic and stick closely to the data and information you provide.

3. Flag potential hallucinations

Generative AI tools can still engage in flights of fancy even when you provide them with good information. When fact-checking AI outputs, always be on the lookout for statements that don’t sound quite right or that make strong claims with little (or irrelevant) supporting evidence. Flag anything that raises an eyebrow, even if it seems like a minor point. After an initial review, you can go back and verify that each flagged statement is accurate.

4. Check for bias

Since AI platforms are trained on datasets produced by humans, they can adopt many of the biases present in that data. The way a prompt is phrased can also skew the output: sometimes a single word or phrase is all it takes for the AI to make assumptions that lead to biased content.

5. Review all sources

When fact-checking AI outputs, never take anything for granted. While newer content AI tools can pull data from the internet to craft their responses, they don’t know how to evaluate those sources critically. Their perception of accuracy is algorithmic, not qualitative. This can lead them to cite sources that repeat common misconceptions or outdated information.

Verifying sources has always been a vital part of the content review process. But when using generative AI to produce content, it’s more important than ever to check that every claim is factual and supported by good sources.

Generative AI Is Still Learning, So Treat It Like a Student

Before I became a copywriter and content strategist, I worked as a high school teacher and college writing tutor. During those years, I saw countless papers riddled with inaccuracies, unverified claims, and misleading omissions of fact. It wasn’t the students’ fault; most had never written anything longer than a paragraph, and those who did had learned to do so through a very rigid, formulaic approach.

Reviewing those papers forced me to be rigorous about fact-checking and verifying claims, and that same approach has proved extremely helpful when fact-checking AI output. It’s ironic that so many students are turning to tools like ChatGPT to write their papers, since generative AI commits many of the same errors that so frequently undermine student writing.

Just because AI platforms present a new way of creating marketing content doesn’t mean existing review and quality control processes can be set aside. These tried-and-true strategies are more than up to the task of fact-checking AI outputs. AI-generated content deserves the same level of scrutiny you would give to writing produced by a human.

To learn more about Contently’s Managing Editors, contact us today for a discovery call.

The Content Strategist provides tips and strategies for content marketers on a variety of topics, including how to use AI responsibly. Subscribe today to get these insights delivered straight to your inbox!

Image by Moor Studio
