
What if Content AI Was Actually Smart?

A few years ago, a client asked me to train a content AI to curate good content for a newsletter sent to more than 20,000 C-suite leaders. At that point in time, I was curating 20 well-written business articles from dozens of third-party publications. My client wanted the content AI to pick the articles instead, with the ultimate goal of fully automating the newsletter.

The end result was… mediocre. The AI could surface articles that were similar to ones the audience had engaged with in the past, but we couldn’t make it smart — which is another way of saying we couldn’t teach it to recognize the ineffable nature of a fresh idea or a dynamic way of talking about it.

Ultimately, my client pulled the plug on the AI project and eventually on the newsletter itself. But I’ve been thinking about that experience as large language models (LLMs) like GPT-4o by OpenAI continue to gain broader mainstream attention.

I wonder if we would have been more successful today using an API into GPT-4o to identify “good” articles.

GPT-4 underpins content AI solutions like ChatGPT and Jasper.ai, which have an impressive ability to understand language prompts and craft cogent text at lightning speed on almost any topic. But content AI has a downside: the clever content these tools produce can feel generic, and they often make things up. Impressive as they are in terms of speed and fluency, today’s large language models don’t think or understand the way humans do.

But what if they did? What if AI developers solved the current limitations of content AI? Or, put another way, what if content AI was actually smart? Let’s walk through a few ways in which it is already getting smarter and how content professionals can use these advances to their advantage.

5 ways content AI is getting smarter

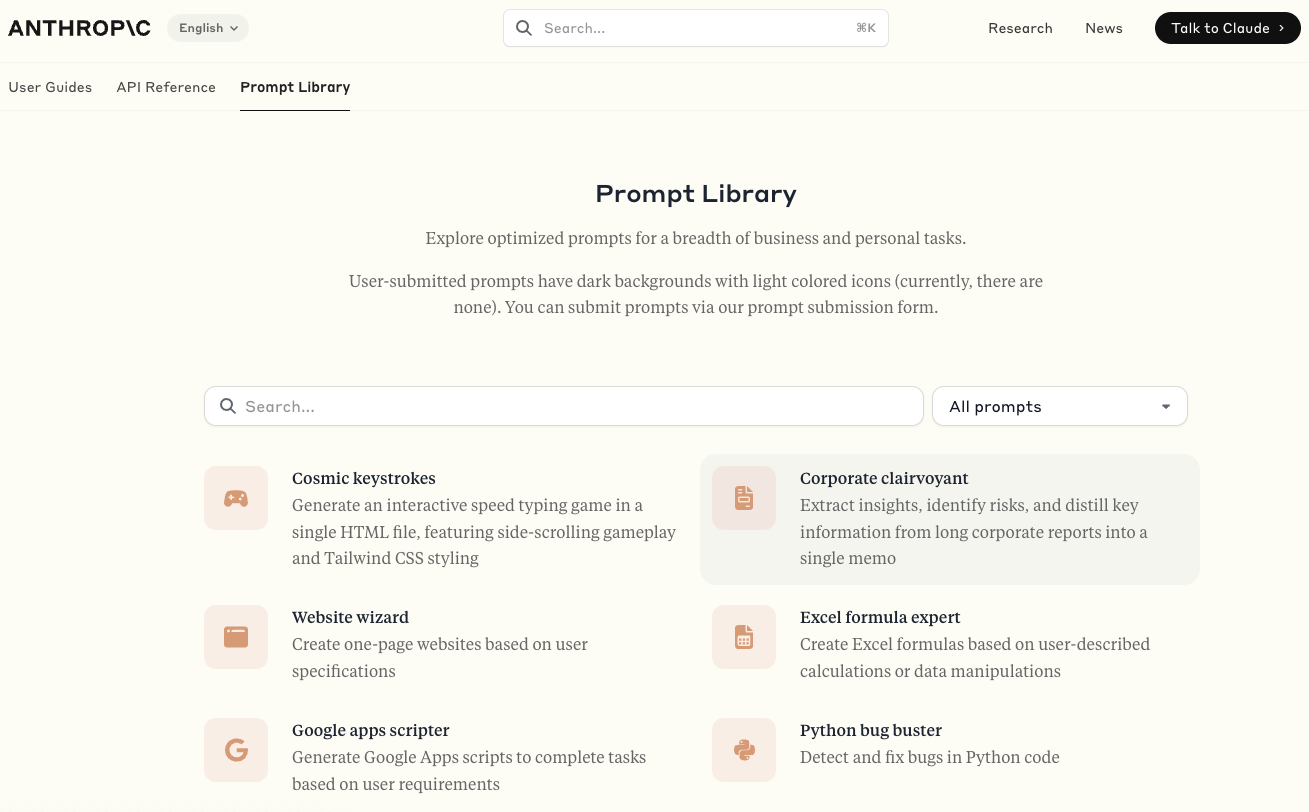

To understand why content AI isn’t truly smart and how it’s getting smarter, it helps to recap how large language models work. GPT-4 and “transformer models” like Gemini by Google, Claude by Anthropic, or Llama by Meta are deep learning neural networks that simultaneously evaluate all of the data (i.e., words) in a sequence (i.e., sentence) and the relationships between them.

To train them, AI developers used web content, which provided far more training data than before. Combined with models that have more parameters, this enabled more fluent outputs for a broader set of applications. Transformers don’t understand those words, however, or what they refer to in the world. The models simply see how words are often ordered in sentences and the syntactic relationships between them.

As a consequence, generative AI works today by predicting the next words in a sequence based on millions of similar sentences it has seen before. This is one reason why “hallucinations” — or made-up information — as well as misinformation are so common with large language models. These tools are simply creating sentences that look like other sentences they have seen in their training data. Inaccuracies, irrelevant information, debunked facts, false equivalencies — all of it — will show up in generated language if it exists in the training language. Many AI experts even think hallucinations are inevitable.
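To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is not how a transformer works internally; it is a toy bigram counter, and the three-sentence corpus and all function names are made up for illustration. It only shows that a system can chain together plausible words from what it has seen without ever checking whether the result is true.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model is trained on vastly more text.
CORPUS = [
    "content ai writes blog posts",
    "content ai writes social copy",
    "content ai writes blog posts quickly",
]

def train_bigrams(sentences):
    """Count which word follows which across all sentences."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequently seen follower, or None if the word was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

def generate(follows, start, max_words=5):
    """Greedily chain predictions; nothing here checks whether the text is accurate."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "content"))
```

The output is fluent only because it mirrors the corpus. If the corpus contained a false statement, the same mechanism would reproduce it just as confidently.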

And yet, you can mitigate them. In fact, today’s large language models hallucinate less often than their predecessors, as shown in this inventive hallucination “leaderboard.” In addition, both data scientists and users have several solutions for reducing them.

Solution #1: AI content prompting

Anyone who has tried an AI app is familiar with prompting. Basically, you tell the tool what you want to write and sometimes how you want to write it. There are simple prompts, such as, “List the advantages of using AI to write blog posts.”

Prompts can also be more sophisticated. For example, you can input a sample paragraph or page of text written according to your firm’s rules and voice and prompt the content AI to generate subject lines or social copy or a new paragraph in the same voice and using the same style.

Prompts are a first-line method for setting rules that narrow the output from content AI. Keeping your prompts focused, direct, and specific limits the chances that the AI will generate off-brand and misinformed copy.
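As a rough illustration of a focused, specific prompt, the Python sketch below assembles one from three parts: the task, explicit rules, and a sample of the house voice. The function name, the rules, and the sample text are all hypothetical, and the resulting string would still need to be sent to whichever content AI tool you use.

```python
def build_style_prompt(task, voice_sample, rules):
    """Assemble a focused prompt: the task, explicit rules, and a voice sample."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"Task: {task}\n\n"
        f"Follow these rules:\n{rule_lines}\n\n"
        "Match the voice and style of this sample:\n"
        f'"""\n{voice_sample}\n"""'
    )

# Hypothetical inputs; swap in your own task, rules, and approved copy.
prompt = build_style_prompt(
    task="Write three subject lines for our March newsletter.",
    voice_sample="We help busy leaders cut through the noise. No jargon, no fluff.",
    rules=["Keep each line under 60 characters", "Do not invent statistics"],
)
print(prompt)
```

Keeping the rules in a list makes it easy to reuse the same guardrails across subject lines, social copy, and body paragraphs.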

Organizations are also experimenting with a form of prompt engineering called retrieval-augmented generation, or RAG. With RAG-enhanced prompts, users direct the model to fulfill the prompt using a specific source of information, often one that is not part of the original training set.

RAG does not 100% prevent hallucinations, but it can help content experts catch inaccuracies because they know what content the AI used to come up with an answer.
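The sketch below shows the general shape of a RAG-style prompt in Python, under heavy simplifying assumptions. Retrieval here is plain word overlap rather than the vector search that production systems typically use, the three sources are invented, and no model is actually called. The point is that the prompt carries its sources with it, so a reviewer can see what the answer was supposed to be based on.

```python
import re

# Hypothetical in-house sources; in practice these might be style guides or product docs.
SOURCES = {
    "pricing-faq": "The Pro plan costs 49 dollars per month and includes five seats.",
    "brand-guide": "Our brand voice is plain, warm, and free of jargon.",
    "release-notes": "Version 2 adds scheduled publishing and a new analytics dashboard.",
}

def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, sources, top_k=1):
    """Rank sources by how many words they share with the question."""
    q_tokens = tokenize(question)
    ranked = sorted(
        sources.items(),
        key=lambda item: len(q_tokens & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_rag_prompt(question, sources, top_k=1):
    """Return the prompt plus the IDs of the sources it includes."""
    hits = retrieve(question, sources, top_k)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    prompt = (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
    return prompt, [doc_id for doc_id, _ in hits]

prompt, used = build_rag_prompt("How much does the Pro plan cost per month?", SOURCES)
print(used)
print(prompt)
```

Returning the source IDs alongside the prompt is a deliberate choice: it gives editors a short list of documents to check the generated answer against.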

For more guidance on prompting techniques, check out this piece for content marketers on writing AI prompts, or read about researcher Lance Eliot’s nine rules for composing prompts to limit hallucinations.

Solution #2: “Chain of thought” prompting

Consider how you would solve a math problem or give someone directions in an unfamiliar city with no street signs. You would probably break down the problem into multiple steps and solve for each, leveraging deductive reasoning to find your way to the answer.

Chain of thought prompting leverages a similar process of breaking down a reasoning problem into multiple steps. The goal is to prime the LLM to produce text that reflects something resembling a reasoning or common-sense thinking process.
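Here is one way such a prompt might be put together, sketched in Python. The wording of the instruction and the worked example are assumptions made for illustration, not a format taken from any particular study, and the code only builds the prompt text without calling a model.

```python
def build_cot_prompt(question, worked_example=None):
    """Wrap a question so the model is asked to show its intermediate steps."""
    parts = []
    if worked_example:
        # A worked example shows the model the step-by-step format to imitate.
        parts.append(f"Example:\n{worked_example}")
    parts.append(f"Question: {question}")
    parts.append(
        "Work through this step by step, numbering each step, "
        "then give the final answer on its own line starting with 'Answer:'."
    )
    return "\n\n".join(parts)

# Hypothetical worked example with simple arithmetic.
EXAMPLE = (
    "Question: A newsletter has 200 subscribers and 10% unsubscribe. How many remain?\n"
    "Step 1: 10% of 200 is 20.\n"
    "Step 2: 200 - 20 = 180.\n"
    "Answer: 180"
)

cot_prompt = build_cot_prompt(
    "A post gets 1,200 views and 5% of viewers click through. How many clicks is that?",
    worked_example=EXAMPLE,
)
print(cot_prompt)
```

Asking for the final answer on a labeled line also makes the response easier to check, since a reviewer can compare the steps against the conclusion.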

Scientists have used chain of thought techniques to improve LLM performance on math problems as well as on more complex tasks, such as inference — which humans automatically do based on their contextual understanding of language. Experiments show that with chain of thought prompts, users can produce more accurate results from LLMs.

Some researchers are even working to create add-ons to LLMs with pre-written prompts and chain-of-thought prompts so that the average user doesn’t need to learn how to do them.

Solution #3: Fine-tuning content AI

Fine-tuning involves taking a pre-trained large language model and training it to fulfill a specific task in a specific field by exposing it to relevant data for that field and eliminating irrelevant data.

A fine-tuned language model ideally has all the language recognition and generative fluency of the original but focuses on a more specific context for better results.

There are hundreds of examples of fine-tuning for tasks like legal writing, financial reports, tax information, and so on. By fine-tuning a model using writings on legal cases or tax returns and correcting inaccuracies in generated results, an organization can develop a new tool that can draft clever content with fewer hallucinations.
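Much of the practical work in fine-tuning is preparing the data. The Python sketch below illustrates only that step: keeping in-domain examples, dropping incomplete ones, and writing one JSON object per line. The "prompt" and "completion" field names, the domain labels, and the sample records are assumptions; each provider defines its own training file format, so check its documentation before using anything like this.

```python
import json

# Hypothetical raw examples; the "domain" labels are assumed to exist already.
RAW_EXAMPLES = [
    {"domain": "tax", "prompt": "Summarize the filing deadline rules.",
     "completion": "A reviewed and corrected summary goes here."},
    {"domain": "sports", "prompt": "Who won the match?",
     "completion": "The home team won."},
    {"domain": "tax", "prompt": "Explain a standard deduction.",
     "completion": ""},  # empty completion: nothing for the model to learn from
]

def prepare_training_lines(examples, domain):
    """Keep complete, in-domain examples and serialize one JSON object per line."""
    lines = []
    for ex in examples:
        if ex["domain"] != domain:
            continue  # drop data that is irrelevant to the target field
        if not ex["prompt"].strip() or not ex["completion"].strip():
            continue  # drop incomplete pairs
        lines.append(json.dumps({"prompt": ex["prompt"], "completion": ex["completion"]}))
    return lines

training_lines = prepare_training_lines(RAW_EXAMPLES, "tax")
print("\n".join(training_lines))
```

The completions are where human correction enters the process: the model learns from whatever text is stored there, so inaccuracies left in the file are likely to resurface in generated copy.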

If it seems implausible that these government-driven or regulated fields would use such untested technology, consider the case of a Colombian judge who reportedly used ChatGPT to draft his decision brief (without fine-tuning).

Solution #4: Specialized model development

Many view fine-tuning a pre-trained model as a faster and less expensive approach compared with building new models. It’s not the only way, though. With enough budget, researchers and technology providers can also leverage the techniques of transformer models to develop specialized language models for specific domains or tasks.

For example, a group of researchers working at the University of Florida and in partnership with Nvidia, an AI technology provider, developed a specialized health-focused large language model to evaluate and analyze language data in the electronic health records used by hospitals and clinical practices.

The result was GatorTron, reportedly the largest-known LLM designed to evaluate the content in clinical records. The team has already developed a related model based on synthetic data, which alleviates privacy worries from using AI content based on personal medical records.

A recent experiment using the model to produce doctor’s notes resulted in AI-generated content that human readers could not identify as such 50% of the time.

Solution #5: Add-on functionality

Generating content is often part of a larger workflow within the business. Instead of stopping with the content, some developers are adding functionality on top of the content for greater value-add.

For example, researchers are trying to develop prompting add-ons so that everyday users don’t have to learn how to prompt well.

That’s just one example. Another comes from Jasper, whose Jasper for Business enhancements are a clear bid for enterprise-level contracts. These include a user interface that lets users define and apply their organization’s “brand voice” to all the copy they create. Jasper has also developed bots that allow users to use Jasper inside enterprise applications that require text.

Another solution provider called ABtesting.ai layers web A/B testing capabilities on top of language generation to test different variants of web copy and CTAs to identify the highest performer.
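How ABtesting.ai scores variants internally is not described here, so the Python sketch below shows only a generic, textbook way to compare two copy variants: a pooled two-proportion z-test on conversion counts. The visitor and conversion numbers are invented for illustration.

```python
import math

def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z statistic for the difference between two conversion rates (pooled)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se

def two_sided_p_value(z):
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical results: variant A is the current CTA, variant B is AI-generated copy.
z = two_proportion_z(conv_a=100, n_a=2000, conv_b=130, n_b=2000)
p = two_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference between variants is unlikely to be random noise, though with many generated variants in play it is worth being cautious about declaring winners from a single test.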

Next steps for leveraging content AI

The techniques I’ve described so far are enhancements or workarounds of the foundational models. As the world of AI continues to evolve and innovate, however, researchers will build AI with abilities closer to real thinking and reasoning.

The Holy Grail of “artificial general intelligence” (AGI), a kind of meta-AI that can fulfill a variety of different computational tasks, is still alive and well. Others are exploring ways to enable AI to engage in abstraction and analogy.

The message for humans whose life and passion is good content creation: AI is going to keep getting smarter. But we can “get smarter,” too.

I don’t mean that human creators should try to beat an AI at the kind of tasks that require massive computing power. But for the time being, the AI needs prompts and inputs. Think of those as the core ideas about what to write. And even when a content AI surfaces something new and original, it still needs humans who recognize its value and elevate it as a priority. In other words, innovation and imagination remain firmly in human hands. The more time we spend using those skills, the wider our lead.


Image by PhonlamaiPhoto